I Turned Down an AI PM Job Because I Couldn't See a Moat

I got to the final round of an AI PM interview last month. The company built AI education tools—teaching people how to use AI for their specific roles. Sales teams, marketing teams, product teams. Each role had custom prompts, workflows, and playbooks.

Early-stage startup. Experienced founding team. Solid funding. Growing fast.

They wanted to move forward. I said no.

Not because of the team. Not because of the product. Not because I had a better offer.

I turned it down because halfway through the interview process, OpenAI released their usage statistics.

The data that changed everything

Between my second and third interview, OpenAI published data on what people use their platform for.

Content creation: #1. Education and learning: #2.

The exact use cases this company was building for.

Then, a week later, OpenAI started rolling out role-specific playbooks. How to use ChatGPT for sales. How to use it for marketing. How to use it for product management.

I was sitting in an interview with a company teaching people how to use AI for specific roles, while OpenAI was publishing guides on how to use AI for specific roles.

That's when it clicked.

This company didn't have a product problem. They had a moat problem.

They were building exactly what the platform was about to give away for free. And the platform had something this startup could never match—data from millions of users showing them exactly what features to build next.

I couldn't answer a simple question: What does success look like for me in this role if we're one update away from irrelevance?

Building on rented land

The Platform Trap is simple: the same platforms powering your product are also your biggest competitors.

A single platform update can replicate most of your product's value.

You're not building a product. You're building on rented land.

And I kept finding examples of companies that learned this the hard way:

Kite was an AI code completion startup. They were early to the space and well funded, with a smart team. Then they went head-to-head with GitHub Copilot. Microsoft had better data and better distribution, and it could afford to subsidize the product. Kite didn't stand a chance. They shut down.

Jasper AI raised over $100 million building AI marketing tools. They had real traction. Then ChatGPT shipped similar capabilities and commoditized their core value overnight. Their differentiation evaporated.

I read about a Fortune 500 company that added an AI chatbot to its app. It hit 100,000 users in three months. Execs celebrated. Six months later, usage collapsed. Why? Competitors cloned the feature in weeks. The bot didn't solve a core business pain. Now it's a dead tab in the app that nobody clicks.

Mid-interview, I was on my phone googling these examples and thinking: This could be us in 12 months.

That's when I realized I couldn't take the role. If I couldn't see how we'd avoid the Platform Trap, I couldn't see how I'd succeed.

What a real moat looks like

After this experience, I started looking for the pattern. What separates companies that get crushed by platform updates from the ones that thrive?

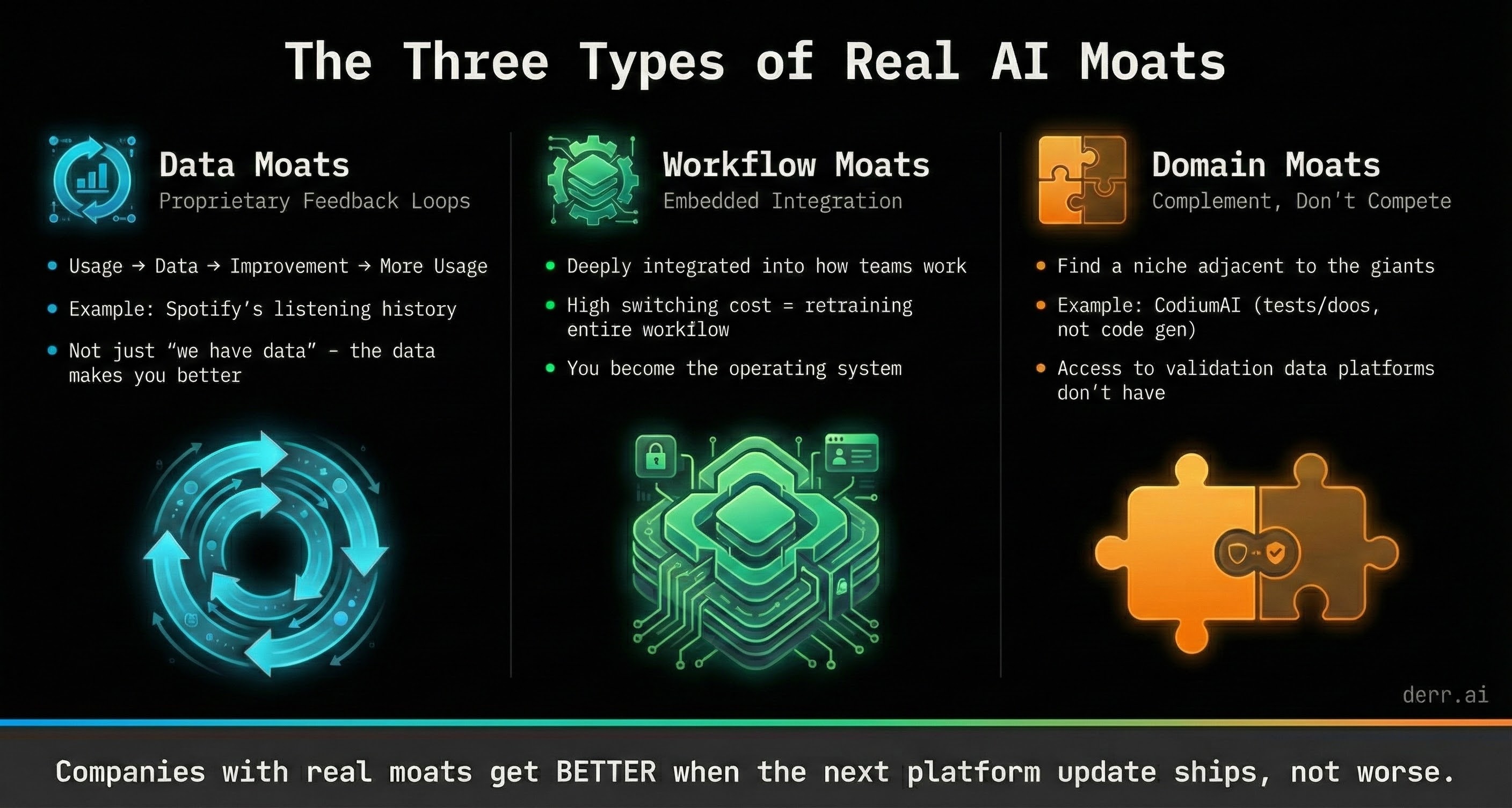

Three types of moats kept showing up.

1. Data Moats: Proprietary feedback loops

This isn't just "we have data." Everyone has data.

It's a loop: we use that data to improve, the improvement brings in more data, and the extra data makes us better still.

There's a legal tech startup that built an M&A document review tool. Their wedge was compressing review time for mergers and acquisitions. But here's the key: they built a proprietary feedback loop where lawyers corrected the AI's outputs. Every correction made the system smarter. Every week, it got better at their specific use case.

Within 18 months, they were acquired for nine figures.

Or look at Spotify. Their moat isn't the 100-million-song catalog. That music is licensed, and competitors can license it too. Their moat is your personal listening history: every song you've skipped, saved, or added to a playlist. That's what powers Discover Weekly. That's data no competitor can replicate.

The pattern: usage generates proprietary data, which improves the product, which drives more usage.

2. Workflow Moats: Embedded integration

This is when your AI becomes so deeply embedded in how people work that it's the default operating system for the job. It's not a feature they use. It's a workflow they can't give up.

The switching cost isn't the price of the product. It's the cost of retraining how the entire team works.

Integration alone isn't the moat. Becoming irreplaceable in the workflow is.

3. Domain Moats: Complement, don't compete

Don't fight the giants head-on. Find a complementary niche.

CodiumAI is a perfect example. They didn't try to compete with GitHub Copilot on code generation. That's a suicide mission. Instead, they focused on the tedious work around code—writing tests and documentation.

They complemented Copilot instead of competing with it, and they raised tens of millions of dollars in venture funding.

The pattern I kept seeing: companies with real moats don't hide from platform updates. They get better when the next major release ships, not worse.

The questions that matter

In that interview, I asked about product roadmap, team structure, go-to-market strategy. Standard PM questions.

Wrong questions.

Here are the three questions I ask in every AI PM interview now:

"What happens to your product when the next model update ships?"

If the answer is "We'll stay ahead by..." or "Our prompts are better," that's a red flag.

If the answer is "We get better because we're building on top of it" or "Our moat is orthogonal to model improvements," that's interesting.

If platform improvements make you worse, not better, you're in the Platform Trap.

"If OpenAI built this exact feature tomorrow, what would you still have?"

I'm listening for:

- A proprietary data flywheel

- Deep workflow integration that's hard to rip out

- Domain-specific ground truth the platform can't access

- Complementary positioning

Red flags:

- "Our UX is better"

- "We got there first"

- "We have a strong brand"

Those aren't moats. Those are feature advantages. And feature advantages evaporate overnight in AI.

"What data are you collecting that makes your AI better over time?"

I'm listening for specifics. Like the legal tech example: lawyers correcting outputs, those corrections feeding back into the model, creating a compounding loop.

Or like Spotify: every user action creates data that makes recommendations better, which drives more engagement, which creates more data.

Red flags:

- "User feedback" (too vague)

- "We're collecting everything" (no strategy)

- "We'll figure that out later" (no moat)

If you can't articulate the flywheel, you don't have one.

What I learned

Turning down that role taught me something: in AI, the moat isn't optional. It's the job.

If you can't articulate the moat, you're not building a product. You're renting shelf space from a platform that's about to kick you out.

The companies that will win in AI aren't the ones with better prompts or slicker UX. They're the ones with structural advantages the platforms can't replicate.

Data flywheels. Workflow integration. Complementary positioning.

The rest is just features. And features evaporate overnight.

Are you seeing this too? I'm curious what red flags you look for when evaluating AI products or roles.

Have you walked away from an opportunity because you couldn't see the moat? Or found a company that actually has one?

Hit reply—I'd love to hear what you're learning.